Liveclicker lets you A/B test the versions of any Liveclicker element in the platform. The winner can be determined based on a click threshold, an open threshold, or statistical significance.

For ongoing campaigns, continuous A/B testing lets you keep testing your content even after the initial test has been sent, so you can continue optimizing your creative.

Note: You need to set up the Subscriber ID when using A/B testing. Without proper Subscriber IDs set up for your elements, your A/B testing analytics may be substantially off, because Liveclicker cannot reliably correlate some impressions and clickthroughs with the original A/B test campaign. This is especially true for Gmail, where the Google Image Proxy completely prevents clickthroughs from being correlated with opens.

There are two ways to set up an A/B test:

- Within a targeting rule, to determine the winning version for that specific rule and audience segment.
- Within an element, to determine the winning version for all recipients who do not match any of the targeting rules, or when no targeting rules have been defined.

Note: You can use multiple A/B tests and combine both methods in one campaign.
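To make the interplay between the two methods concrete, here is a minimal Python sketch of how a single open could be routed to a rule-level test, the element-level test, or a fixed version. It is a conceptual model, not Liveclicker's implementation: every class and field name is hypothetical, and it assumes that targeting rules are evaluated in order and the first matching rule wins.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Open:
    """Hypothetical attributes of a single email open."""
    device: str
    city: str


@dataclass
class ABTest:
    name: str
    versions: list[str]


@dataclass
class TargetingRule:
    name: str
    matches: Callable[[Open], bool]
    version: str                      # version shown when the rule has no A/B test
    ab_test: Optional[ABTest] = None  # set by "A/B test this rule"


@dataclass
class Element:
    default_version: str
    rules: list[TargetingRule] = field(default_factory=list)
    ab_test: Optional[ABTest] = None  # set by "Add A/B Test +"


def resolve(element: Element, open_: Open) -> str:
    """Return the A/B test or fixed version that handles this open."""
    for rule in element.rules:  # assumption: rules checked in order, first match wins
        if rule.matches(open_):
            if rule.ab_test is not None:
                return f"test:{rule.ab_test.name}"
            return f"version:{rule.version}"
    # No targeting rule matched: fall back to the element-level test or the default.
    if element.ab_test is not None:
        return f"test:{element.ab_test.name}"
    return f"version:{element.default_version}"


chicago_iphone = TargetingRule(
    name="iPhone users in Chicago",
    matches=lambda o: o.device == "iPhone" and o.city == "Chicago",
    version="banner-a",
    ab_test=ABTest("chicago-banners", ["banner-a", "banner-b"]),
)
element = Element("banner-default", [chicago_iphone],
                  ABTest("default-banners", ["banner-default", "banner-c"]))
print(resolve(element, Open("iPhone", "Chicago")))  # test:chicago-banners
print(resolve(element, Open("Android", "Boston")))  # test:default-banners
```

Because each open is handled by exactly one test in this model, combining a rule-level test with an element-level test in the same campaign never mixes their audiences.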

A/B testing at the Liveclicker element level

A/B testing at the element level runs a 50/50 test of your Liveclicker content across every opener in your campaign who does not match any of the campaign's targeting rules.

Example: Let's say that you want to test two banner images in your email campaign, with no other segmentation, to see which one gets a higher click-to-open rate. You can do that easily with a simple A/B test.

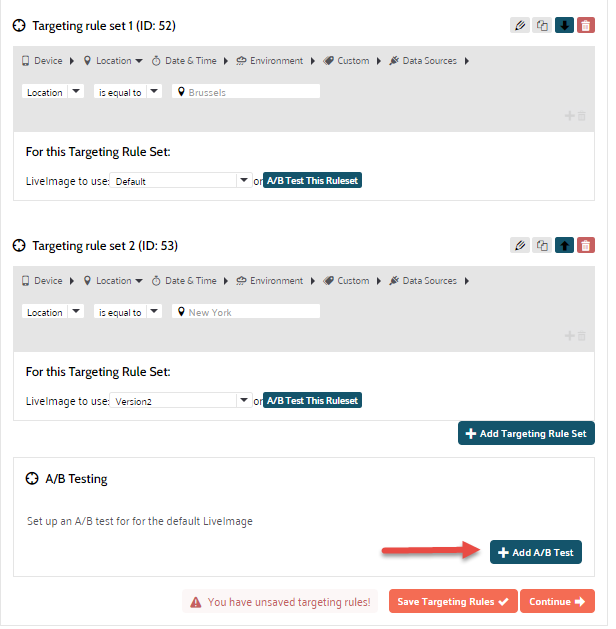

1. To configure this type of A/B test, click the Add A/B Test + button at the bottom of the Targeting rules section.

This sets up the A/B test for the default image, which is displayed when none of the targeting rules apply.

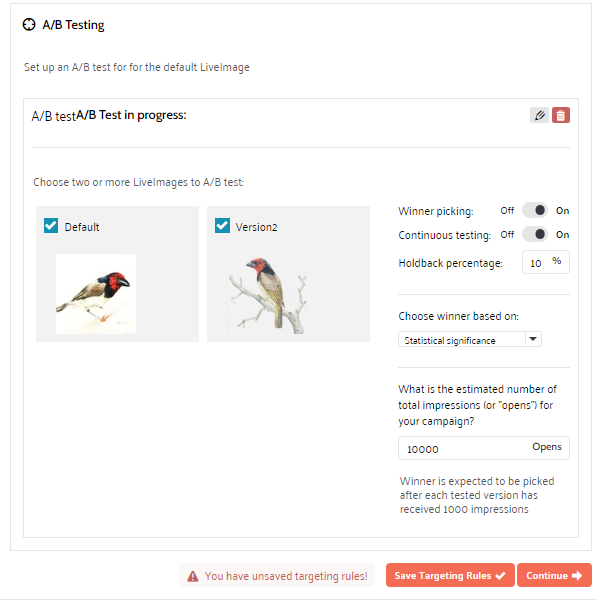

2. The different versions of the LiveImage are loaded, together with the A/B test options.

3. Select the versions you want to test and define the testing options on the right.

4. When done, click Continue. The winning version of the A/B test will be shown to the remainder of the audience that does not match any of the targeting rules.
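The element-level flow can be sketched as follows: versions are split 50/50 while the test runs, and once a winner is picked on click-to-open rate, every remaining opener who matches no targeting rule sees the winner. This is a hypothetical Python model: the class names are invented for illustration, and alternating assignment is only one simple way to get an even split; Liveclicker does not document how it assigns versions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class VariantStats:
    name: str
    opens: int = 0
    clicks: int = 0

    @property
    def click_to_open_rate(self) -> float:
        # Click-to-open rate = clicks / opens (0.0 before any opens are recorded).
        return self.clicks / self.opens if self.opens else 0.0


class ElementABTest:
    """Hypothetical sketch: 50/50 split while testing, winner for everyone afterwards."""

    def __init__(self, version_a: str, version_b: str) -> None:
        self.stats = [VariantStats(version_a), VariantStats(version_b)]
        self.winner: Optional[str] = None
        self._served = 0

    def serve(self) -> str:
        if self.winner is not None:
            return self.winner  # remainder of the audience gets the winner
        # Assumption: alternate the two versions to get an even 50/50 split.
        variant = self.stats[self._served % 2]
        self._served += 1
        variant.opens += 1
        return variant.name

    def record_click(self, version: str) -> None:
        for variant in self.stats:
            if variant.name == version:
                variant.clicks += 1

    def pick_winner(self) -> str:
        # Higher click-to-open rate wins; max() keeps the first version on a tie.
        self.winner = max(self.stats, key=lambda v: v.click_to_open_rate).name
        return self.winner


test = ElementABTest("banner-a", "banner-b")
print([test.serve() for _ in range(4)])  # ['banner-a', 'banner-b', 'banner-a', 'banner-b']
test.record_click("banner-b")
print(test.pick_winner())                # banner-b
print(test.serve())                      # banner-b
```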

A/B testing within a targeting rule

A/B testing in a targeting rule allows you to set up A/B tests for a specific segment of recipients and determine the final version to be shown to the remainder of the audience in that segment.

Example: Let's say that you want to test two different banner images, but only for iPhone users in Chicago. You can do so by setting up an A/B test for that individual rule.

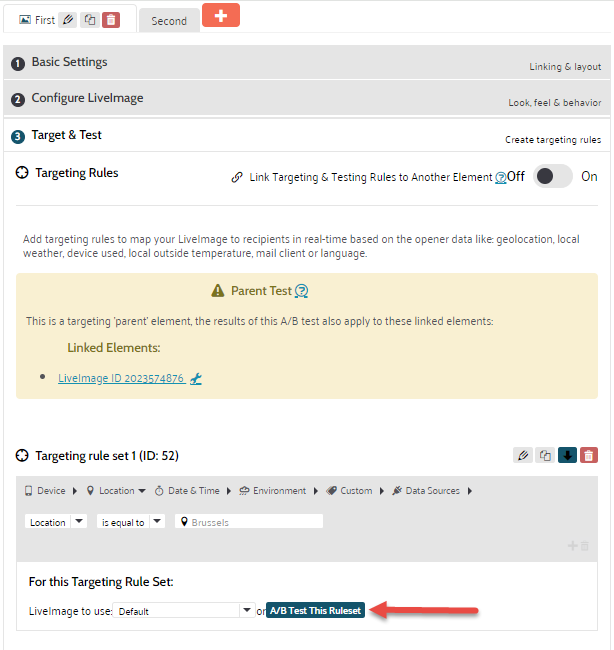

1. To set up an A/B test for the rule, go to the targeting rule and click A/B test this rule. This lets you configure the A/B test and define its outcome.

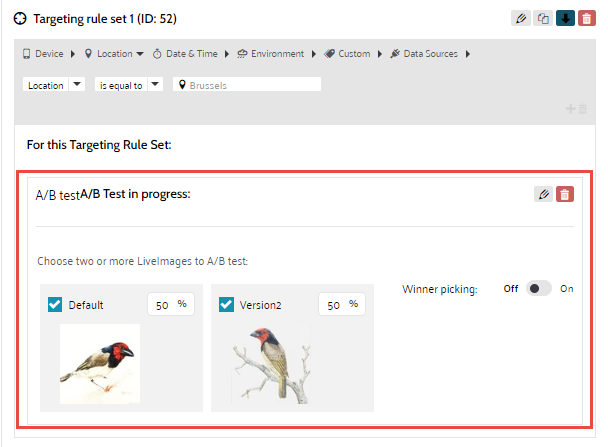

2. The different versions are loaded, together with the testing options on the right.

3. Select the versions that need to be included in the test and define the testing options.

Note: When the default version of an element is included in the test, only impressions from recipients in your segment are taken into account for the A/B test and its reports. Impressions from recipients outside the segment defined by the targeting rule are not considered.

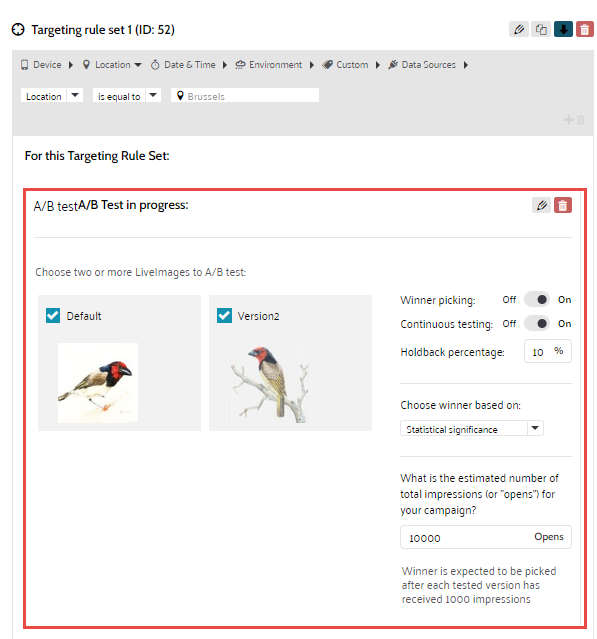

4. Next, define whether a winner should be picked automatically by toggling the Winner picking option ON.

5. If a winner should be selected automatically, define the following settings:

Continuous testing: Use this option for triggered campaigns that are sent out periodically. It lets you keep testing over time, even after a winner has been chosen for the test.

Holdback percentage: This option is used in combination with continuous testing. It defines the percentage of openers for whom testing continues. If, at any point, a non-winning version starts to beat the existing winner, Liveclicker automatically replaces the winner with the new winning version.

Example: If you set the holdback percentage to 10%, this is what happens after your winner is chosen:

- The winning version is delivered to 90% of opens.
- The remaining 10% of opens continue to be A/B tested.

Choose winner based on —

-

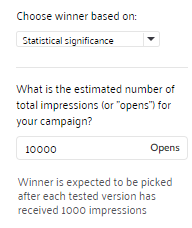

statistical significance: A statistical algorithm is used to define the winner. For this, the system requires an estimated amount of email opens.

Technical note: To determine a winner, Liveclicker uses the standard statistical formula for calculating a sample size from a population of known size, assuming a normal (bell-curve) distribution, with a confidence level of 95% and a margin of error of 3%. Note that the platform uses the recorded number of clicks to decide the winner.

The "known size of a population" is the estimated number of email opens that you provide.

The "sample size" is the number of opens Liveclicker uses before calculating the winner; an estimate of this number is shown below the estimated number of opens.

Example: If your campaign list has 100,000 recipients and your average open rate is 10%, you could enter 10,000 (100,000 × 10%) as your estimated number of opens.

-

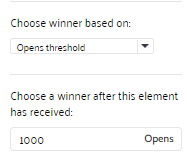

Open threshold: The exact number of opens received for the element before a winner is chosen. The version with the highest click to open rate is chosen

-

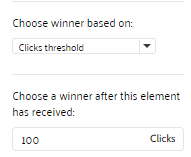

Click threshold: The exact number of clicks required, before the winner is chosen. The version with the highest click to open rate is chosen.

6. You have now finished setting up the A/B test for your targeting rule.
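The automatic winner-picking settings can be approximated in a short Python sketch. For the statistical-significance option it assumes Cochran's sample-size formula with a finite population correction and p = 0.5 (the usual conservative choice), which fits the described 95% confidence level and 3% margin of error but may not be exactly what Liveclicker computes. The function names are hypothetical, and holdback opens are assumed to be split evenly across all versions.

```python
from __future__ import annotations

import math
import random

Z_95 = 1.96  # z-score for a 95% confidence level


def sample_size(estimated_opens: int, margin_of_error: float = 0.03,
                z: float = Z_95, p: float = 0.5) -> int:
    """Assumed formula: Cochran's sample size with finite population correction.

    n0 = z^2 * p * (1 - p) / e^2
    n  = n0 / (1 + (n0 - 1) / N), where N is the estimated number of opens
    """
    n0 = z ** 2 * p * (1 - p) / margin_of_error ** 2
    return math.ceil(n0 / (1 + (n0 - 1) / estimated_opens))


def ready_to_pick(criterion: str, total_opens: int, total_clicks: int,
                  threshold: int) -> bool:
    """threshold is the sample size, open threshold, or click threshold."""
    if criterion in ("statistical_significance", "open_threshold"):
        return total_opens >= threshold  # both are measured in opens
    if criterion == "click_threshold":
        return total_clicks >= threshold
    raise ValueError(f"unknown criterion: {criterion}")


def route_after_winner(winner: str, versions: list[str], holdback_pct: float,
                       rng: random.Random) -> str:
    """Continuous testing: holdback_pct% of opens keep being A/B tested."""
    if rng.random() * 100 < holdback_pct:
        return rng.choice(versions)  # assumption: even split across all versions
    return winner


def current_winner(winner: str, holdback_stats: dict[str, tuple[int, int]]) -> str:
    """Swap in another version if it now beats the winner on click-to-open rate.

    holdback_stats maps version -> (opens, clicks) recorded on held-back opens.
    """
    if not holdback_stats:
        return winner

    def ctor(version: str) -> float:
        opens, clicks = holdback_stats.get(version, (0, 0))
        return clicks / opens if opens else 0.0

    best = max(holdback_stats, key=ctor)
    return best if ctor(best) > ctor(winner) else winner


print(sample_size(10_000))  # 965
```

With 10,000 estimated opens, `sample_size` returns 965 under these assumptions; the estimate Liveclicker displays below the estimated number of opens remains the authoritative figure. With a 10% holdback, roughly 95% of opens see the winner in this model (the 90% served directly plus about half of the held-back 10%).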