The Connect product supports AWS S3 as a destination. To facilitate that connection, we have systems and processes in place to ensure that the connection between Sailthru and your S3 bucket is secure. This page guides you through several bucket policy variations, depending on your needs: a joint bucket policy (for Data Exporter and Event Stream), an Event Stream only bucket policy, and a Data Exporter connection advisory. An FAQ covering security and encryption is located at the bottom of this page.

Bucket Policy variations

The Amazon S3 bucket policy feature allows you to grant specific systems and users access to your bucket. We offer three policy variations for the different combinations of the Connect data features. The policy variations are:

- A joint bucket policy for both Event Stream and Data Exporter.

- A bucket policy specifically for Event Stream.

- A bucket policy specifically for Data Exporter.

Note that in all of these cases, we recommend securing your bucket. Review your company's policies with regard to data security and, for this product, block public access. More information can be found in Amazon's documentation, or by getting in touch with your AWS representative.
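As an illustration of the recommendation to block public access, here is a minimal Python sketch. It assumes you manage the bucket with the boto3 library and have AWS credentials configured; the function name `block_public_access` is hypothetical, and the same settings can be applied in the AWS console instead.

```python
# Settings that switch on all four S3 Block Public Access controls.
PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def block_public_access(bucket_name: str) -> None:
    """Apply the settings above to one bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the settings can be inspected without boto3

    s3 = boto3.client("s3")
    s3.put_public_access_block(
        Bucket=bucket_name,
        PublicAccessBlockConfiguration=PUBLIC_ACCESS_BLOCK,
    )
```

These settings block public ACLs and public bucket policies only. A bucket policy that names specific AWS principals, like the samples on this page, is not a public policy.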

Joint Bucket Policy for Event Stream and Data Exporter

Note: Remember to replace [yourbucketname] in the code with your S3 bucket name in the two 'Resource' lines. Be sure to also omit the square brackets in the sample.

1. Configure an S3 bucket dedicated to Sailthru. This bucket can be located in any AWS region.

2. Take note of the S3 bucket name; you will need it once the policy in step 3 is in place.

3. Apply a bucket policy giving Sailthru the appropriate write access. Below these instructions is a sample policy with an export bucket called [yourbucketname] (this can be copied and pasted; you only need to change the bucket name to your own and remove the square brackets).

4. Contact Support with the bucket name and a path to export Data Exporter file outputs.

Bucket policy example

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "bucket permissions",
                "Effect": "Allow",
                "Principal": {"AWS": ["arn:aws:iam::728023223659:user/integ", "arn:aws:iam::099647660399:root"]},
                "Action": [
                    "s3:GetBucketLocation",
                    "s3:ListBucket"
                ],
                "Resource": "arn:aws:s3:::[yourbucketname]"
            },
            {
                "Sid": "key permissions",
                "Effect": "Allow",
                "Principal": {"AWS": ["arn:aws:iam::728023223659:user/integ", "arn:aws:iam::099647660399:root"]},
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObject",
                    "s3:PutObjectAcl",
                    "s3:GetObjectAcl"
                ],
                "Resource": "arn:aws:s3:::[yourbucketname]/*"
            }
        ]
    }
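If you prefer to generate the joint policy rather than edit the sample by hand, the following Python sketch fills in the bucket name and rejects a name that still contains the square brackets. The helper names `build_joint_policy` and `apply_policy` are hypothetical; `apply_policy` assumes the boto3 library and configured AWS credentials, and pasting the generated JSON into the AWS console works equally well.

```python
import json

# Principals copied from the joint bucket policy sample above.
SAILTHRU_PRINCIPALS = [
    "arn:aws:iam::728023223659:user/integ",
    "arn:aws:iam::099647660399:root",
]


def build_joint_policy(bucket_name: str) -> dict:
    """Return the joint Event Stream / Data Exporter policy for one bucket."""
    if not bucket_name or "[" in bucket_name or "]" in bucket_name:
        raise ValueError("use the plain bucket name, without square brackets")
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "bucket permissions",
                "Effect": "Allow",
                "Principal": {"AWS": SAILTHRU_PRINCIPALS},
                "Action": ["s3:GetBucketLocation", "s3:ListBucket"],
                "Resource": bucket_arn,
            },
            {
                "Sid": "key permissions",
                "Effect": "Allow",
                "Principal": {"AWS": SAILTHRU_PRINCIPALS},
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:DeleteObject",
                    "s3:PutObjectAcl",
                    "s3:GetObjectAcl",
                ],
                "Resource": f"{bucket_arn}/*",
            },
        ],
    }


def apply_policy(bucket_name: str, policy: dict) -> None:
    """Attach the policy to the bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder works without boto3

    boto3.client("s3").put_bucket_policy(
        Bucket=bucket_name, Policy=json.dumps(policy)
    )
```

For example, `print(json.dumps(build_joint_policy("my-export-bucket"), indent=4))` prints a policy ready to paste, where `my-export-bucket` is a placeholder for your own bucket name.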

Event Stream only policy for S3

Note: Remember to replace [yourbucketname] in the code with your S3 bucket name in the two 'Resource' lines. Be sure to also omit the square brackets in the sample.

1. Configure an S3 bucket dedicated to Sailthru. This bucket can be located in any AWS region.

2. Take note of the S3 bucket name; you will need it after step 3 to configure the connection.

3. Apply a bucket policy giving Sailthru the appropriate write access. Below is a sample policy with an export bucket called [yourbucketname] (this can be copied and pasted; you only need to change the bucket name to your own and remove the square brackets).

Bucket policy example

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "bucket permissions",
                "Effect": "Allow",
                "Principal": { "AWS": "arn:aws:iam::099647660399:root" },
                "Action": "s3:ListBucket",
                "Resource": "arn:aws:s3:::[yourbucketname]"
            },
            {
                "Sid": "object permissions",
                "Effect": "Allow",
                "Principal": { "AWS": "arn:aws:iam::099647660399:root" },
                "Action": [ "s3:PutObject", "s3:DeleteObject" ],
                "Resource": "arn:aws:s3:::[yourbucketname]/*"
            }
        ]
    }

Data Exporter only policy for S3

Grant the Sailthru user account permission to write to a bucket owned by your company, as described in the AWS documentation. You will use the Sailthru public S3 account principal: arn:aws:iam::728023223659:user/integ

http://docs.aws.amazon.com/AmazonS3/latest/dev/example-walkthroughs-managing-access-example2.html

After setting up the bucket with the account principal, contact Support with the bucket name and a path to export Data Exporter file outputs. For more about the Data Exporter dataset, see our Get Started documentation.

Configuring Connect Event Stream to use your S3 Bucket

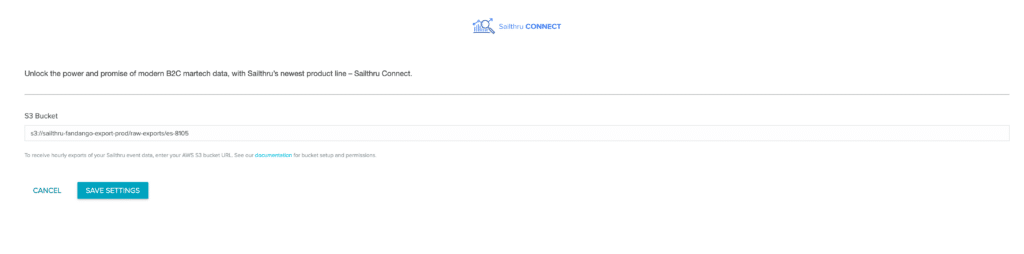

Log in to My Sailthru and configure your S3 bucket in the Settings > Setup > Integrations view. Enter your S3 bucket name in the "Sailthru Connect S3 Bucket" field.

After the connection is set

Once you've saved your S3 bucket and implemented the proper policy, two processes will start:

- Event data will start streaming to your bucket within 2 hours. After the stream starts, more data will be streamed every hour.

- A connection validation will run intermittently. We will upload and then delete a file named _ACCESS_CONFIRMATION, which can be safely ignored. This is a top-level file, located at s3://example_bucket/_ACCESS_CONFIRMATION.
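Because the validation file can briefly appear in bucket listings, a downstream job that processes the bucket may want to skip it. The Python sketch below shows one way to do that; the function name `event_data_keys` and the sample object keys in the usage note are hypothetical and do not describe the actual Event Stream file layout.

```python
from typing import Iterable, List

# Top-level validation file that Sailthru uploads and then deletes.
ACCESS_CONFIRMATION_KEY = "_ACCESS_CONFIRMATION"


def event_data_keys(keys: Iterable[str]) -> List[str]:
    """Return the object keys with the validation file filtered out."""
    return [key for key in keys if key != ACCESS_CONFIRMATION_KEY]
```

For instance, `event_data_keys(["_ACCESS_CONFIRMATION", "events/part-0001.json"])` returns only the second key, so the validation file never reaches your processing code.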

Frequently Asked Questions

Q: Is the transmission of data secure between Sailthru and my bucket?

A: Yes. We use Amazon's S3 sync method with SSL by default, so data is encrypted while in transit.

Q: Does Sailthru support server-side encryption? How?

A: Sailthru supports a method documented by Amazon so that your data is encrypted at rest by default. This method does not rely on the bucket policy; instead, it uses default encryption set up via the console, which has worked for several of our Connect customers. From Amazon's documentation:

Amazon S3 default encryption provides a way to set the default encryption behavior for an Amazon S3 bucket. You can set default encryption on a bucket so that all objects are encrypted when they are stored in the bucket. The objects are encrypted using server-side encryption with either Amazon S3-managed keys (SSE-S3) or AWS Key Management Service (AWS KMS) customer master keys (CMKs).

Q: How would I get started with default encryption?

A: To use default encryption with Connect when encrypting with KMS:

- Use the fully qualified ARN for the KMS key when setting up default encryption. If no other account is specified, AWS looks for the KMS key in the caller's (Sailthru's) account.

- The KMS key must have a policy attached granting Sailthru certain permissions needed for multipart uploads. The permissions needed are:

  - Decrypt

  - DescribeKey

  - Encrypt

  - GenerateDataKey

  - GenerateDataKeyWithoutPlaintext

  - ReEncryptFrom

  - ReEncryptTo
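The permission list above could be expressed as a key policy statement along the following lines. This is a sketch under stated assumptions: the helper `build_kms_statement` and the `Sid` value are hypothetical, the `kms:` prefix is the standard AWS action namespace for these permissions, and the principal ARN to grant is not stated in this FAQ, so confirm it with Support before using it.

```python
from typing import Dict, List

# Permission names as listed in the FAQ answer above.
KMS_PERMISSIONS: List[str] = [
    "Decrypt",
    "DescribeKey",
    "Encrypt",
    "GenerateDataKey",
    "GenerateDataKeyWithoutPlaintext",
    "ReEncryptFrom",
    "ReEncryptTo",
]


def build_kms_statement(principal_arn: str) -> Dict[str, object]:
    """Build one key policy statement granting the listed permissions.

    principal_arn is an assumption: pass the Sailthru principal that
    Support confirms for your account.
    """
    return {
        "Sid": "AllowSailthruConnect",  # hypothetical statement ID
        "Effect": "Allow",
        "Principal": {"AWS": principal_arn},
        "Action": [f"kms:{name}" for name in KMS_PERMISSIONS],
        "Resource": "*",  # in a key policy, "*" refers to the key itself
    }
```

The resulting dictionary would be added to the `Statement` list of the KMS key's existing policy rather than replacing that policy.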

Q: Should I enable public access on the S3 bucket that receives Connect data?

A: No.

Q: What encryption should I use for my S3 bucket?

A: We recommend you use the default encryption (SSE-S3).
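The answers above describe setting default encryption through the console. If you would rather script it, a rough Python equivalent might look like the sketch below. It assumes the boto3 library and configured AWS credentials; the function name `enable_sse_s3_default` is hypothetical, and `AES256` is the algorithm value S3 uses for SSE-S3.

```python
# Default-encryption rule that selects Amazon S3-managed keys (SSE-S3).
SSE_S3_DEFAULT = {
    "Rules": [
        {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
    ]
}


def enable_sse_s3_default(bucket_name: str) -> None:
    """Turn on SSE-S3 default encryption (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the rule can be inspected without boto3

    boto3.client("s3").put_bucket_encryption(
        Bucket=bucket_name,
        ServerSideEncryptionConfiguration=SSE_S3_DEFAULT,
    )
```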